Claude Skills vs. MCP: A Practical Comparison

TL;DR: Both are powerful, but they're different! Skills are simple and efficient, whereas MCP is better for connecting to third-party data.

Two weeks ago, Anthropic launched Claude Skills, short instructions or code scripts you can use to perform a specific task within Claude.

Anthropic’s example Skills range from creating PowerPoints to referencing brand guidelines to spinning up MCP servers. You can access them via Settings → Capabilities:

In this post, I’ll cover what Skills are, why people are excited, how they compare with MCP servers, and what I think this means strategically going forward.

What are Skills?

In practice, Skills are markdown files (formatted text) that give the LLM specific instructions. They can also include small code snippets to perform actions.
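To make that concrete, here is a minimal sketch of what a Skill on disk might look like, written as a small Python helper. It assumes the layout Anthropic describes publicly, as I understand it: a folder containing a `SKILL.md` file that opens with YAML frontmatter holding a `name` and a `description`. The helper `write_skill` and the brand-guidelines wording are hypothetical, purely for illustration.

```python
from pathlib import Path
import tempfile

# Assumed layout: one folder per Skill, containing a SKILL.md whose
# frontmatter carries a short name and description.
SKILL_TEMPLATE = """\
---
name: {name}
description: {description}
---

{instructions}
"""


def write_skill(root: Path, name: str, description: str, instructions: str) -> Path:
    """Create <root>/<name>/SKILL.md and return the path to the file."""
    skill_dir = root / name
    skill_dir.mkdir(parents=True, exist_ok=True)
    skill_file = skill_dir / "SKILL.md"
    skill_file.write_text(
        SKILL_TEMPLATE.format(
            name=name, description=description, instructions=instructions
        ),
        encoding="utf-8",
    )
    return skill_file


if __name__ == "__main__":
    # Hypothetical example content; write into a throwaway directory.
    with tempfile.TemporaryDirectory() as tmp:
        path = write_skill(
            Path(tmp),
            name="brand-guidelines",
            description="Apply the company's brand rules to any document.",
            instructions="Use the approved color palette and the house tone of voice.",
        )
        print(path.read_text(encoding="utf-8"))
```

The point is only that a Skill is mostly plain text: a short description up front, followed by the instructions themselves.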

You can think of Skills as a replacement for a few different workflows:

Copy-pasting prompts

Instead of copy-pasting a prompt, you can turn it into a Skill and invoke it by name.

Adding the same context repeatedly

The “brand guidelines” Skill is a good example. Instead of re-uploading your brand rules every time, you can put those instructions into a Skill and reference them dynamically.

Running code to manipulate files or call APIs

Since Skills can include code, they make it easy to automate tasks that are trivial with code but difficult without it. A great example of this might be converting between file types to create images or PDFs.

The third use case is the most interesting to me personally, because it opens up the widest range of possibilities!
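As an illustration of that third use case, here is a sketch of the kind of small script a Skill could bundle. It is not one of Anthropic's Skills. I picked a CSV-to-Markdown-table conversion (a hypothetical example) because it needs only the Python standard library, whereas image or PDF conversion would pull in extra packages.

```python
import csv
import io


def csv_to_markdown(csv_text: str) -> str:
    """Convert CSV text into a Markdown table, treating the first row as the header."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    if not rows:
        return ""
    header, *body = rows
    lines = [
        "| " + " | ".join(header) + " |",
        "| " + " | ".join("---" for _ in header) + " |",
    ]
    for row in body:
        # Pad short rows and trim long ones so every row matches the header width.
        cells = (row + [""] * len(header))[: len(header)]
        lines.append("| " + " | ".join(cells) + " |")
    return "\n".join(lines)


if __name__ == "__main__":
    print(csv_to_markdown("tool,best for\nSkills,repeatable tasks\nMCP,third-party data\n"))
```

A task like this is tedious for a model to do token by token but trivial for a ten-line script, which is why bundling code with instructions is so useful.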

Example Skill Use Cases

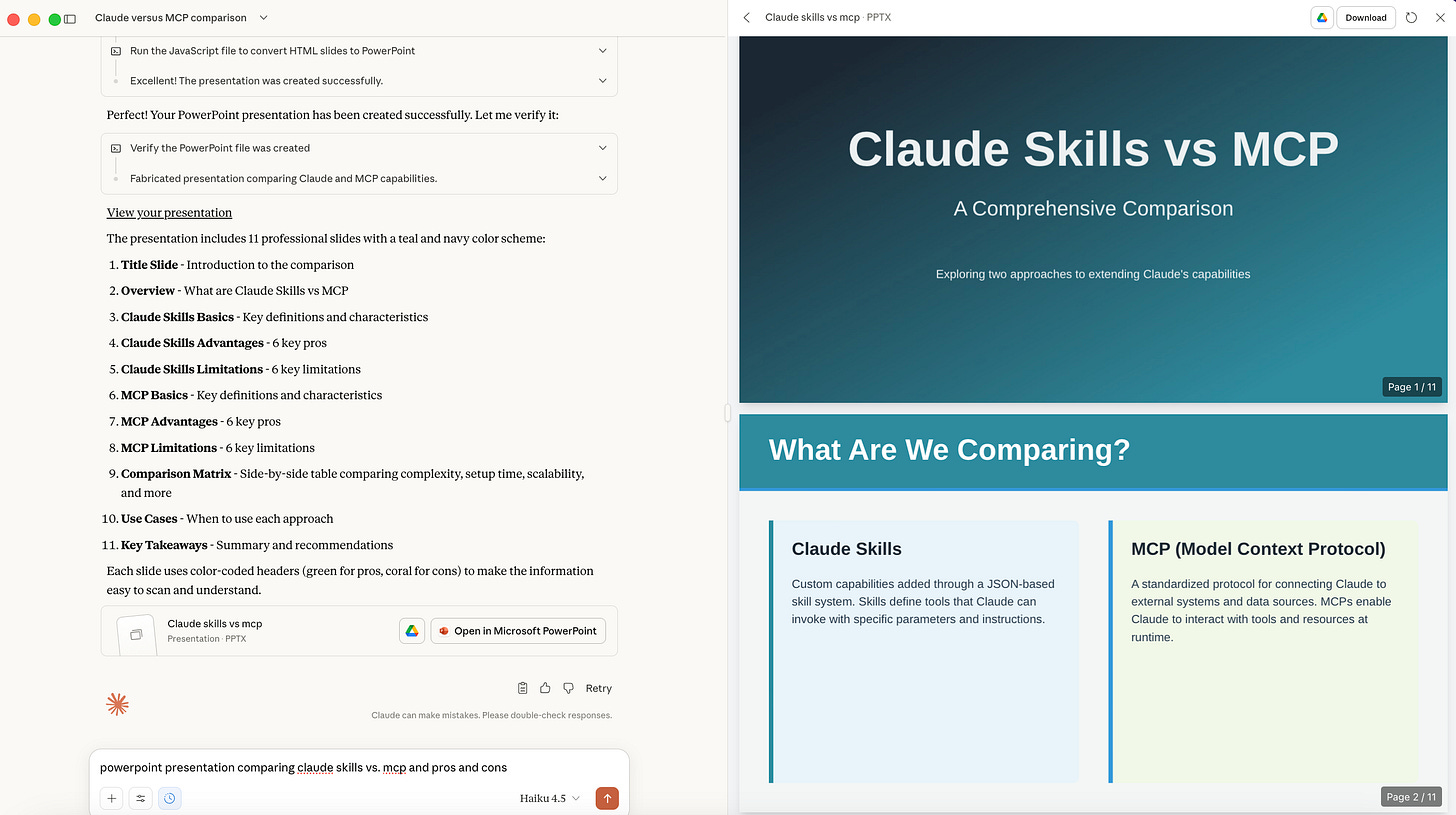

Of the built-in Skills, I’ve been most impressed by the PowerPoint creation one. I’ve tried a lot of AI slide apps (Gamma, Canva, etc.), and the results usually look artificial or amateur.

In contrast, this is pretty legit. It just looks the way a PowerPoint ‘should’ look in the world of business:

When downloaded, the PowerPoint is also easily editable. Details matter: the colored accents are shapes, not images, so I can adjust the colors, sizes, and styles if I so choose.

It’s also really easy to build your own Skills (via the incredibly meta skill-builder-skill).

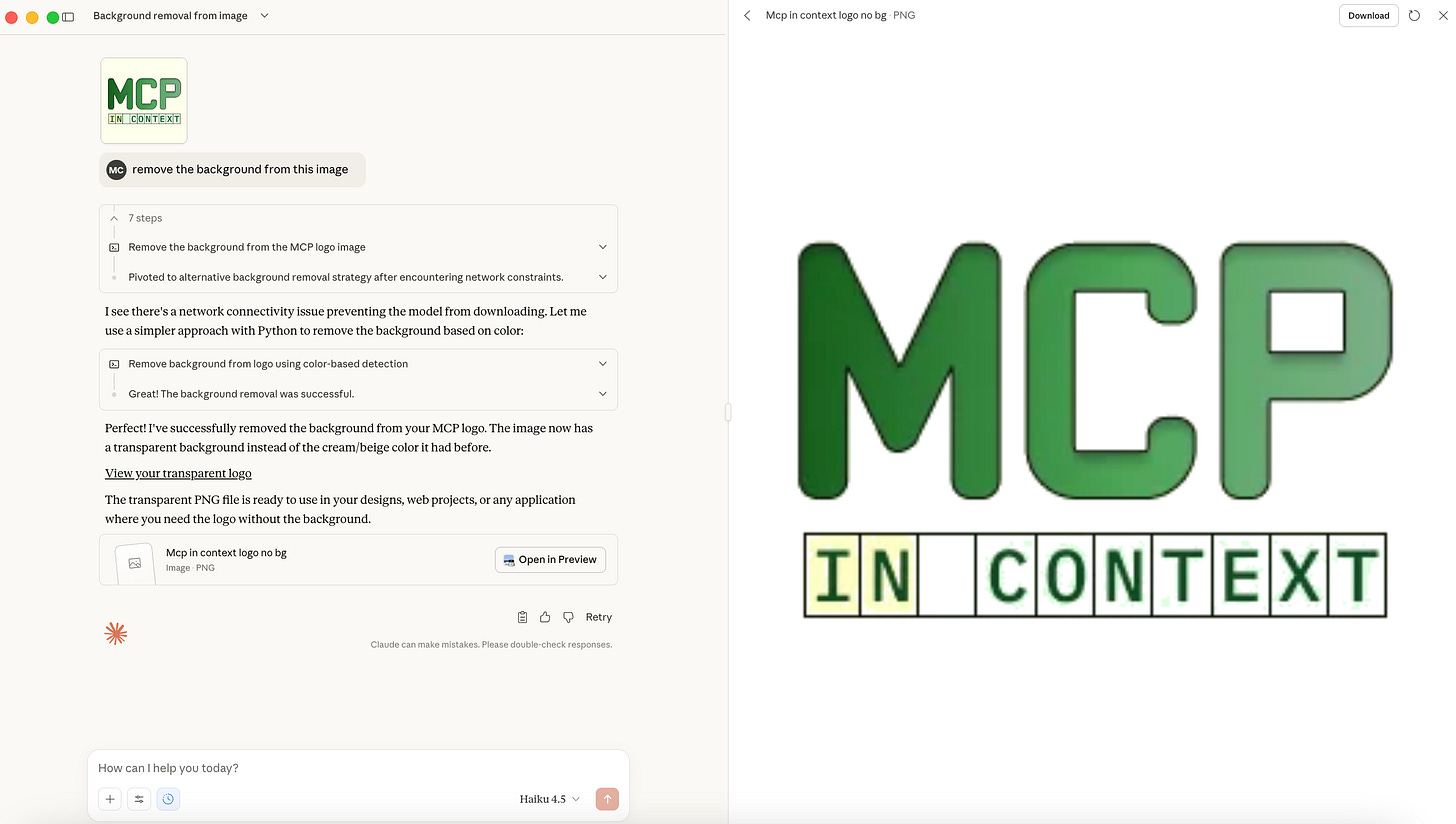

I built a background remover Skill, inspired by remove.bg. Now Claude can strip backgrounds from images, in just a few seconds:

Skills vs. MCP Servers

So, how do Skills compare to MCP servers?

Well, here’s the presentation slide that Claude created on this topic:

My summary:

MCP servers are better for accessing and working with data from third-party companies within an LLM. Skills can’t really do this right now.[1]

MCP servers have also been around longer, are open-source tech, and have now been adopted by all the major model companies. There is also a huge range of available MCP servers, including many official ones maintained by companies. For now, Skills are specific to Anthropic/Claude (although the concept is highly replicable for other firms) and there are far more limited options.

Skills are much better than MCP servers for specific, repetitive tasks that don’t involve data from third-party companies. You can create your own and start using them in minutes; MCP servers tend to be more complex.

Skills are very, very token efficient. While this isn’t captured in the slide above, it’s a very important consideration that we’ll unpack momentarily.

To summarize, MCP servers lead in enterprise data access. Skills win for personal workflows and lightweight automation.

I suspect I’ll use Skills as much as or more than I use MCP servers going forward, and I hope OpenAI launches something similar soon!

An Aside on Token Efficiency

One of the main critiques of MCP servers is their context consumption.

Context is very important: it gives the model the information and capacity it needs to make informed decisions, incorporate third-party data, use tools, and more. But context is expensive.

Context requires compute.

More context means more tokens. More tokens require more compute and more electricity. Compute and electricity are resource-constrained and expensive (and those costs are passed along to companies who use the models), so context efficiency really matters.

Context is finite.

Every LLM has a specified context window, which is the maximum amount of content that the model can ‘keep in mind’ at one time.

Too much context can lead to ‘context rot,’ where the LLM starts to get confused and overwhelmed by the sheer amount of info.

As a result, context efficiency is often both cheaper and more performant.
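A quick back-of-the-envelope sketch shows why this adds up. Every number below is a made-up assumption for illustration (the overhead size, the request count, the price, and the window size); none of them comes from a real provider's pricing or a real MCP server.

```python
def context_overhead_cost(
    overhead_tokens: int, requests: int, usd_per_million_input_tokens: float
) -> float:
    """Cost of re-sending the same fixed context overhead on every request."""
    return overhead_tokens * requests * usd_per_million_input_tokens / 1_000_000


def window_share(overhead_tokens: int, context_window_tokens: int) -> float:
    """Fraction of the context window used before the user has said anything."""
    return overhead_tokens / context_window_tokens


if __name__ == "__main__":
    # Hypothetical figures: 10,000 tokens of always-loaded tool definitions,
    # 1,000 requests, $3 per million input tokens, a 200,000-token window.
    print(context_overhead_cost(10_000, 1_000, 3.0))
    print(window_share(10_000, 200_000))
```

Under those assumed figures, the fixed overhead alone costs $30 per thousand requests and takes up 5% of the window on every call, before any actual work happens.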

Why Skills are More Token Efficient

MCP servers often bloat context because companies turn APIs directly into tools without considering how people will actually use those tools. Also, all of the functionality (like OAuth login) that is valuable for Enterprise data sharing doesn’t come for free; it takes up tokens.

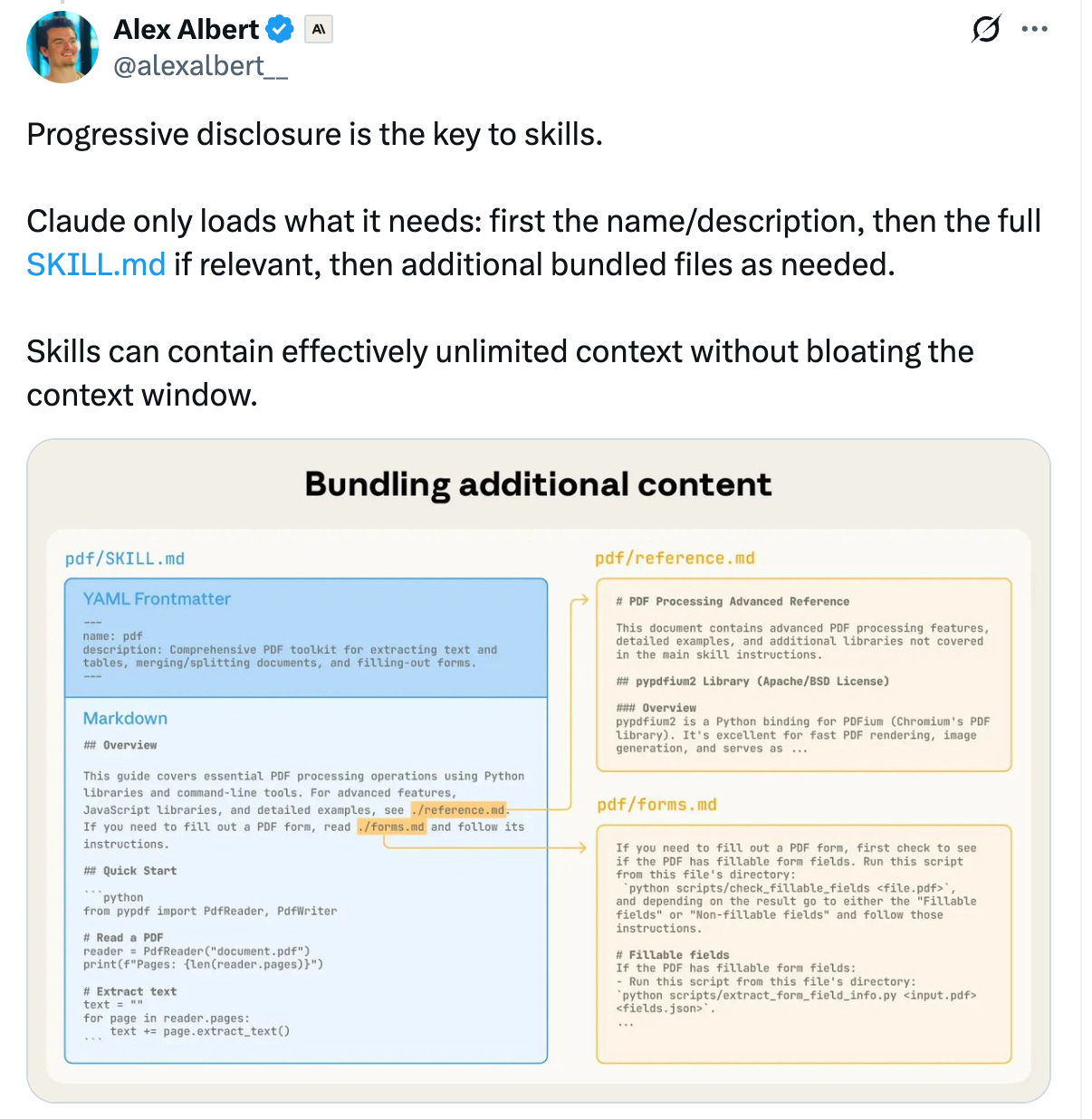

Skills, on the other hand, are built to be lightweight. They let the LLM progressively access only the context it needs, keeping things efficient:

The tradeoff: less enterprise-grade functionality. But for most users, that’s a great trade to get custom automation without cost or bloat.
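The "progressively access only the context it needs" idea can be sketched in a few lines. This is a simplified illustration under my own assumptions, not Anthropic's implementation: the `Skill` class, `startup_context`, and `context_for_task` are hypothetical names, and the step where the model decides which Skill is relevant is replaced by a plain `chosen_name` argument.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Skill:
    name: str
    description: str
    # The full instructions are loaded lazily, only if the Skill is chosen.
    load_body: Callable[[], str]


def startup_context(skills: List[Skill]) -> str:
    """What is always in context: one short line per Skill, no bodies."""
    return "\n".join(f"- {s.name}: {s.description}" for s in skills)


def context_for_task(skills: List[Skill], chosen_name: str) -> str:
    """Short index of every Skill, plus the full body of the chosen Skill only."""
    parts = [startup_context(skills)]
    parts.extend(s.load_body() for s in skills if s.name == chosen_name)
    return "\n\n".join(parts)


if __name__ == "__main__":
    skills = [
        Skill("pptx", "Create PowerPoint decks.", lambda: "Long slide-building instructions..."),
        Skill("brand-guidelines", "Apply brand rules.", lambda: "Long brand rulebook..."),
    ]
    print(context_for_task(skills, "brand-guidelines"))
```

The contrast with a tool-heavy setup is that unused Skills cost one line each, rather than a full definition each.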

For more on this topic, I highly recommend Simon Willison’s blog post, “Claude Skills are awesome, maybe a bigger deal than MCP.”

Zooming Out

Strategically, I find it fascinating that Anthropic open-sourced MCP but kept Skills proprietary to Claude.[2]

Anthropic’s roadmap for Skills indexes heavily on making them valuable for teams and companies, for example via sharing across organizations. That aligns with Anthropic’s clear Enterprise focus (vs. OpenAI’s more consumer-led approach), as well as with the examples of skills they’ve created thus far (e.g. for brand guidelines). If Anthropic wants to win for work use cases, it makes sense that they don’t want to share their latest innovation.

In practice, Skills adoption seems to be moving somewhat slowly, though that’s not incredibly surprising. MCP had a year’s head start, and AI tooling in general is evolving faster than users can keep up.

That said, I think Anthropic has also missed the mark so far by not introducing any sort of Skill directory. Right now the closest equivalent is a GitHub repo, which doesn’t work for most audiences. A true Skill marketplace with how-to blogs or demos showing what people can create would be incredible; people just haven’t yet explored the range of what’s possible.

I’m excited to continue to try out Skills and explore new use cases; if anyone has found ones they find valuable (either existing Skills or ones you’ve created), I’d love to hear about them!

[1] While Skills can do this to some extent, Notion is the only third-party company that I’ve seen publish its own Skills, and the Notion Skills require you to also have Notion’s MCP server to facilitate data access. The Skills then help teach Claude to turn the data from the Notion MCP server into nice summaries or new documents. While Skills could theoretically call third-party APIs, you quickly run into issues: there is no support for OAuth, and it isn’t clear how you would securely store an API key.

[2] Anthropic wrote a lovely and very comprehensive tech blog about how Skills work, and a different model provider could easily provide functionality for users to repurpose existing Skills (markdown snippets + scripts) alongside their LLMs. However, replicability aside, the posture is very different from an open-source protocol with its own third-party documentation site and a group of maintainers that span an array of tech firms.

I'm not shocked Anthropic open-sourced MCP and not Skills, because I think if they hadn't open-sourced MCP there's zero chance it would have been adopted; whatever open-source alternative was best would have won instead. When I was at Google and they open-sourced TensorFlow, Jeff Dean (superstar engineer) said it was embarrassing that when Google released Bigtable, it had to implement the "industry standard HBase API". It was annoying to twist the product to fit a different API, especially since HBase was itself based on the Bigtable whitepaper. But usually, in things like libraries and tools that are very centered around devs, open source will win. Skills is a bit more of a Claude feature than any sort of protocol or standard, as your post points out, though of course there are shades of grey to all these things.